Corporations increasingly rely on mobile enterprise systems to meet their business objectives, allowing mobile devices such as smartphones and tablets to access critical applications on the corporate network. These devices offer capabilities that traditional desktop clients lack, such as information sharing, access from anywhere at any time, data sensors, and location awareness. But the very features that make mobile devices desirable also pose a new set of security challenges. Reports by research agencies in recent years show an alarming trend in mobile security threats. The top concerns they list are malware attacks on Android and, on the iOS platform, enterprise provisioning abuse and outdated OS versions.

These trends highlight the need for corporations to take their mobile security strategy as seriously as cyber criminals take the planning of future attacks. A mobile security strategy might involve adopting Mobile Security Guidelines published by standards organizations such as NIST and by the OWASP Mobile Security Project. See the references at the end of this document.

The following guidelines are a subset of Mobile Security Guidelines that I pulled from various published sources, most of them from NIST. This is by no means a comprehensive list, but the guidelines can serve as a starting point or as additional considerations for an existing mobile security strategy.

1 – Understand the Mobile Enterprise Architecture

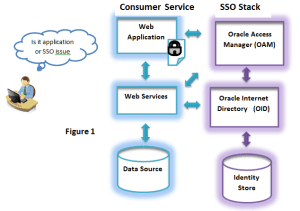

Start by understanding and diagramming the flow from the mobile application to the business applications running on the back-end application server. Do this in the early stages, because most of the security guidelines depend on what is known about the architecture.

- Is the mobile application a native application or mobile web application? Is it a cross-platform mobile application?

- Does the mobile application use middleware to reach the back-end API, or does it connect directly to a back-end RESTful web service?

- Does the mobile application connect to an API gateway?

2 – Diagram the network topology of how the mobile devices connect

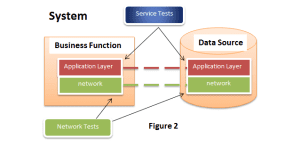

Is the mobile device connecting to the business application servers over the cellular network, internally through a private WiFi network, or both? Does it go through a proxy or firewall? This type of information will aid in developing security requirements and will help with establishing a QA security test bed and a monitoring capability.

3 – Develop Mobile Application Security Requirements

At a high level, a security function must protect against unauthorized access and, in many cases, protect privacy and sensitive data. In most cases, building security into mobile applications is not top of mind in the software development process. For that reason, these requirements should be gathered as early as possible in the Software Development Life Cycle (SDLC). In my personal experience, you often have to work with application software developers to get best security practices adopted, so the sooner you can get that dialogue going, the better. Security objectives to consider are confidentiality, integrity, and availability. Can the mobile OS platform provide the security services required? How sensitive is the data you are trying to protect? Should the data be encrypted in transit and in storage? Do you need to consider data-in-motion protection technologies? Should an Identity and Access Management (IDAM) solution be architected as part of the mobile enterprise system? Should it include Single Sign-On (SSO) functionality? Should there be multi-factor authentication, and role-based or fine-grained access control? Is federation required? Should the code be obfuscated to prevent reverse engineering?
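To make the access control questions above more concrete, here is a minimal sketch of a role-based check that also requires multi-factor authentication for sensitive operations. The role names, permission strings, and the `Session` type are all hypothetical and chosen only for illustration. A real system would pull roles and authentication state from its IDAM solution.

```python
from dataclasses import dataclass

# Hypothetical role-to-permission map. In practice this would come from
# the IDAM system rather than being hard-coded.
ROLE_PERMISSIONS = {
    "field_agent": {"orders:read"},
    "manager": {"orders:read", "orders:approve"},
}

# Hypothetical set of permissions that require a second factor.
MFA_REQUIRED = {"orders:approve"}


@dataclass(frozen=True)
class Session:
    user: str
    roles: frozenset
    mfa_verified: bool


def is_allowed(session: Session, permission: str) -> bool:
    """Return True if any of the session's roles grants the permission
    and, for sensitive permissions, the session passed MFA."""
    granted = set()
    for role in session.roles:
        granted |= ROLE_PERMISSIONS.get(role, set())
    if permission not in granted:
        return False
    if permission in MFA_REQUIRED and not session.mfa_verified:
        return False
    return True
```

Deny is the default here: an unknown role or an unlisted permission grants nothing, which is usually the safer starting point when writing requirements.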

4 – Incorporate a Mobile Device Security Policy

What types of mobile devices should be allowed to access the organization’s critical assets? Should you allow personal mobile devices under a Bring Your Own Device (BYOD) policy, or permit only organization-issued or certified mobile devices to access certain resources? Should you enforce tiers of access? Centralized mobile device management technologies are a growing solution for controlling the use of both organization-issued and BYOD devices by enterprise users. These technologies can remotely wipe the data from, or lock, a mobile device that has been lost or stolen. Should enterprises require anti-malware software and OS upgrades before a device is certified for the network? To reduce the number of high-risk mobile devices, consider technologies that can detect and ban devices that are jailbroken or rooted, as these pose the greatest risk of being compromised by hackers.
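As one way to picture tiers of access, the sketch below maps a device's reported posture to an access tier. The tier names, the `Device` fields, and the minimum OS version are assumptions made for this example, not values taken from NIST or any particular product.

```python
from dataclasses import dataclass
from enum import Enum


class AccessTier(Enum):
    BLOCKED = 0
    LIMITED = 1
    FULL = 2


@dataclass(frozen=True)
class Device:
    organization_issued: bool
    jailbroken_or_rooted: bool
    os_version: tuple          # e.g. (16, 2)
    anti_malware_installed: bool


# Assumed minimum OS version for certification; pick your own baseline.
MIN_OS_VERSION = (15, 0)


def access_tier(device: Device) -> AccessTier:
    """Map device posture to an access tier under the assumed policy."""
    # Jailbroken or rooted devices are banned outright.
    if device.jailbroken_or_rooted:
        return AccessTier.BLOCKED
    # A device is "certified" only with a current OS and anti-malware.
    certified = (device.os_version >= MIN_OS_VERSION
                 and device.anti_malware_installed)
    if not certified:
        return AccessTier.BLOCKED
    # Certified organization-issued devices get full access,
    # certified BYOD devices get limited access.
    if device.organization_issued:
        return AccessTier.FULL
    return AccessTier.LIMITED
```

Whether an uncertified device should be blocked or merely limited is a policy decision. The sketch takes the stricter option.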

5 – Application Security Testing

According to a study performed by The Ponemon Institute, nearly 40% of 400 companies surveyed were not scanning their applications for security vulnerabilities, leaving the door wide open for cyber-attacks. This highlights the urgency for security teams to put together a security vetting process that identifies security vulnerabilities and validates security requirements as part of an ongoing QA security testing function. Application scanning technologies typically use two methods: Static Application Security Testing (SAST), which analyzes the source code, and Dynamic Application Security Testing (DAST), which sends modified HTTP requests to a running web application in an attempt to exploit its vulnerabilities. As the QA scanning process matures, it can be automated and integrated into the software build process to detect security issues in the early phases of the SDLC.
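Integrating scans into the build can be as simple as a gate that fails the build when a scan reports serious findings. The sketch below assumes a hypothetical scanner report format, a JSON list of objects with `id` and `severity` fields. Real SAST and DAST tools each have their own report schema, so the parsing would need to be adapted.

```python
import json

# Assumed severity scale; adjust to match the scanner in use.
SEVERITY_RANK = {"low": 1, "medium": 2, "high": 3, "critical": 4}


def blocking_findings(report_json: str, threshold: str = "high") -> list:
    """Return the findings at or above the threshold severity."""
    findings = json.loads(report_json)
    limit = SEVERITY_RANK[threshold]
    # Unrecognized severities are treated as blocking so that they are
    # reviewed instead of silently ignored.
    return [f for f in findings
            if SEVERITY_RANK.get(f["severity"], limit) >= limit]


def build_exit_code(report_json: str, threshold: str = "high") -> int:
    """Return 1 (fail the build) if any blocking finding exists, else 0."""
    return 1 if blocking_findings(report_json, threshold) else 0
```

A build script could pass the result of `build_exit_code` to `sys.exit` so that the pipeline stops before a vulnerable build is published.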

6 – System Threat Model, Risk Management Process

The application scanning process typically produces a list of security vulnerabilities, each classified as noise, suspect, or definitive. It is then up to the security engineers, who know the system architecture and network topology, to work with the application developers to determine whether each vulnerability represents a valid threat and to assign a risk level based on the impact of a possible security breach. Once the risk for each application is determined, it can be managed through an enterprise risk management system, where vulnerabilities are tracked and fixed and the risk is brought down to a more tolerable level.
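The triage described above could be sketched as a simple scoring step. The weights, the 1 to 5 impact scale, and the level thresholds below are assumptions for illustration only. An actual risk management process would define its own scale.

```python
from dataclasses import dataclass

# Assumed weights for the scanner's classification of a finding.
CONFIDENCE_WEIGHT = {"noise": 0, "suspect": 1, "definitive": 2}


@dataclass(frozen=True)
class Finding:
    name: str
    confidence: str   # "noise", "suspect", or "definitive"
    impact: int       # assumed scale: 1 (minor) to 5 (severe)


def risk_score(finding: Finding) -> int:
    """Combine scanner confidence with the engineers' impact rating."""
    return CONFIDENCE_WEIGHT[finding.confidence] * finding.impact


def risk_level(score: int) -> str:
    """Bucket a score into a level using assumed thresholds."""
    if score >= 8:
        return "high"
    if score >= 4:
        return "medium"
    if score > 0:
        return "low"
    return "none"


def triage(findings: list) -> list:
    """Drop noise and order the remaining findings by descending risk."""
    real = [f for f in findings if risk_score(f) > 0]
    return sorted(real, key=risk_score, reverse=True)
```

The impact rating is the part that needs human judgment: it depends on where the application sits in the architecture and what data it can reach.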

7 – Consider implementing a Centralized Mobile Device Management System

Depending on the Mobile Security Policy that is in place, you may want to consider implementing a Centralized Mobile Device Management System, especially when Bring Your Own Device (BYOD) mobiles are in the mix. Such a system can:

- Manage certificates, security settings, profiles, etc. for mobile devices through a directory service or administration portal.

- Enforce security settings and restrictions on organization-issued and BYOD mobile devices through policy-based management.

- Manage credentials for each mobile device through a directory service.

- Provide self-service automation for BYOD, reducing overall administrative costs.

- Control which applications are installed on organization-issued devices and check for suspect applications on BYOD mobile devices.

- Remotely wipe or lock a stolen or lost phone.

- Detect jailbroken or rooted mobile devices.

8 – Security Information and Event Management (SIEM)

Monitor mobile device traffic to back-end business applications. Track mobile devices and critical business applications, and correlate them with events and log information to look for malicious activity based on threat intelligence. On some platforms it may be possible to integrate with a centralized risk management system, so that the SIEM is specifically on alert for suspicious mobile events that correlate with applications at higher risk.
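A very small version of this kind of correlation is sketched below: it counts authentication failures per device against applications that a risk management system has flagged as higher risk. The event fields (`device_id`, `app`, `outcome`) and the threshold are hypothetical. A real SIEM would work from its own log schema and would use time windows.

```python
from collections import Counter


def suspicious_devices(events: list, high_risk_apps: set,
                       threshold: int = 3) -> list:
    """Return the sorted IDs of devices with at least `threshold`
    authentication failures against high-risk applications."""
    failures = Counter(
        e["device_id"]
        for e in events
        if e["outcome"] == "auth_failure" and e["app"] in high_risk_apps
    )
    return sorted(d for d, count in failures.items() if count >= threshold)


# Illustrative events, not real log data.
events = [
    {"device_id": "dev-1", "app": "payroll", "outcome": "auth_failure"},
    {"device_id": "dev-1", "app": "payroll", "outcome": "auth_failure"},
    {"device_id": "dev-1", "app": "payroll", "outcome": "auth_failure"},
    {"device_id": "dev-2", "app": "payroll", "outcome": "auth_failure"},
    {"device_id": "dev-3", "app": "wiki", "outcome": "auth_failure"},
]
flagged = suspicious_devices(events, high_risk_apps={"payroll"})
```

Here only `dev-1` reaches the threshold on a high-risk application, so it is the only device flagged for follow-up.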

References:

Today you live with the risks of users accessing many more services outside the corporate network perimeter as well as users carrying many more devices to access these services. Users have too many passwords and the passwords are inherently weak. In fact passwords have become more of an impediment to users than they are protection from hackers and other malevolent individuals and organizations. In short, in many cases, passwords alone cannot be trusted to properly and securely identify users.