Developing an Entitlements Management Approach

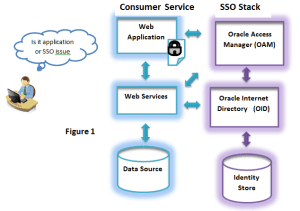

We were sitting down with a client during some initial prioritization discussions in an Identity and Access Management (IAM) Roadmap effort, when the talk turned to entitlements and how they were currently being handled. Like many companies, they did not have a unified approach on how they wanted to manage entitlements in their new world of unified IAM (a.k.a. the end of the 3 year roadmap we were helping to develop). Their definition of entitlements also varied from person to person, much less how they wanted to define and enforce them. We decided to take a step back and really dig into entitlements, entitlement enforcement, and some of the other factors that come into play, so we could put together a realistic enterprise entitlement management approach. We ended up having a really great discussion that touched on many areas within their enterprise. I wanted to briefly discuss a few of the topics that really seemed to resonate with the audience of stakeholders sitting in that meeting room.

(For the purpose of this discussion, entitlements refer to the privileges, permissions or access rights that a user is given within a particular application or group of applications. These rights are enforced by a set of tools that operate based on the defined policies put in place by the organization. Got it?)

- Which Data is the Most Valuable?- There were a lot of dissenting opinions on which pieces of data were the most business critical, which should be most readily available, and which data needed to be protected. As a company’s data is moved, replicated, aggregated, virtualized and monetized, a good Data Management program is critical to making sure that an organization has handle on the critical data questions:

- What is my data worth?

- How much should I spend to protect that data?

- Who should be able to read/write/update this data?

- Can I trust the integrity of the data?

- The Deny Question – For a long time, Least Privilege was the primary model that people used to provide access. It means that an entitlement is specifically granted for access and all other access is denied, thus providing users with exact privilege needed to do their job and nothing more. All other access is implicitly denied. New thinking is out there that says that you should minimize complexity and administration by moving to an explicit deny model that says that everyone can see everything unless it is explicitly forbidden. Granted, this model is mostly being tossed around at Gartner Conferences, but I do think you will see more companies that are willing to loosen their grip on the information that doesn’t need protection, and focus their efforts on those pieces of data that are truly important to their company.

- Age Old Questions – Fine-Grained vs. Coarse-Grained. Roles vs. Rules. Pirates vs. Ninjas. These are questions that every organization has discussed as they are building their entitlements model.

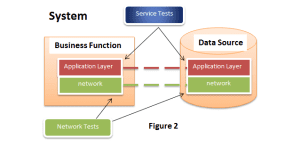

- Should the entitlements be internal to the application or externalized for unified administration?

- Should roles be used to grant access, should we base those decisions on attributes about the users, or should we use some combination?

- Did he really throw Pirates vs. Ninjas in there to see if we were still paying attention? (Yes. Yes, I did).

There are no cut and dry answers for these questions, as it truly will vary from application to application and organization to organization. The important part is to come to a consensus on the approach and then provide the application teams, developers and security staff the tools to manage entitlements going forward.

- Are We Using The Right Tools? – This discussion always warms my heart, as finding the right technical solution for customers IAM needs is what I do for a living. I have my favorites and would love to share them with you but that is for another time. As with the other topics, there really isn’t a cookie cutter answer. The right tool will come down to how you need to use it, what sort of architecture, your selected development platform, and what sort of system performance you require. Make sure that you aren’t trying to make the decisions you make on the topics above based on your selected tool, but rather choose the tool based on the answers to the important questions above.